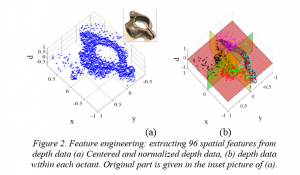

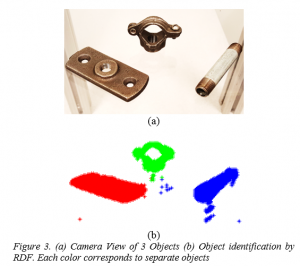

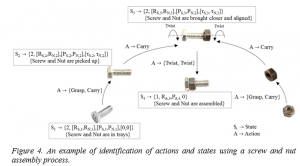

The proposed project contributes to the autonomy of industrial robots for advanced manufacturing. Changing requirements in manufacturing demand tedious reprogramming of robots. The project explores a new modality for retraining a robot, called “imitation learning,” which relies on natural communication between a human expert and a trainee robot and uses state-of-the-art computer vision sensors, stochastic data modelling, and high-level semantic reasoning based on advanced machine learning. Data from demonstrations by a human trainer are collected with the sensors and clustered using a Gaussian mixture model (GMM); the clusters are in turn used to train random decision forests (RDF) to identify body/hand joints, workpieces, and tools. This generates prior knowledge for robot learning. Using this knowledge, the robot is able to identify and track the objects. The trajectories of the motions involved in a manufacturing task are decomposed into simpler motions, which the robot can semantically classify into higher-level actions and states. These actions and states are used to autonomously define an action grammar represented by a finite state machine. The finite state machine is solved as a Markov decision process (MDP). The correspondence problem between the kinematic and dynamic spaces of the human and the robot is solved using optimal control techniques.
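To make the step from finite state machine to MDP more concrete, the following is a minimal sketch of value iteration over a small action grammar. It is an illustration under stated assumptions, not the project's implementation: the states (`idle`, `at_part`, `holding`, `done`), the actions (`reach`, `grasp`, `place`, `retreat`), the transition probabilities, and the rewards are all hypothetical, and the project description does not say which MDP solver is used.

```python
# Hypothetical action grammar for a pick-and-place task.
# All state names, action names, probabilities, and rewards are illustrative.
# TRANSITIONS[state][action] = list of (probability, next_state, reward)
TRANSITIONS = {
    "idle": {
        "reach": [(0.9, "at_part", -1.0), (0.1, "idle", -1.0)],
    },
    "at_part": {
        "grasp": [(0.8, "holding", -1.0), (0.2, "at_part", -1.0)],
        "retreat": [(1.0, "idle", -1.0)],
    },
    "holding": {
        "place": [(0.9, "done", 10.0), (0.1, "at_part", -1.0)],
        "retreat": [(1.0, "idle", -1.0)],
    },
    "done": {},  # terminal state: no outgoing actions
}


def q_value(values, outcomes, gamma):
    """Expected discounted return of one action given current state values."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)


def value_iteration(transitions, gamma=0.95, tol=1e-8, max_iter=10_000):
    """Return (state values, greedy policy) for a finite MDP."""
    values = {s: 0.0 for s in transitions}
    for _ in range(max_iter):
        delta = 0.0
        for state, actions in transitions.items():
            if not actions:  # terminal states keep value 0
                continue
            best = max(q_value(values, o, gamma) for o in actions.values())
            delta = max(delta, abs(best - values[state]))
            values[state] = best
        if delta < tol:
            break
    # Greedy policy: pick the action with the highest expected return.
    policy = {
        state: max(actions, key=lambda a: q_value(values, actions[a], gamma))
        for state, actions in transitions.items()
        if actions
    }
    return values, policy


if __name__ == "__main__":
    values, policy = value_iteration(TRANSITIONS)
    for state in TRANSITIONS:
        print(state, round(values[state], 3), policy.get(state, "(terminal)"))
```

In this toy model the greedy policy selects `reach`, then `grasp`, then `place`, which is the action sequence one would expect the grammar to encode for a successful pick-and-place. In a real system the transition probabilities would presumably be estimated from the classified demonstration data rather than written by hand.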

Researcher: Dr. Pramod Chembrammel